Data analytics always comes down to numbers, which is not always intuitive in the world of IT administration. Numbers are well understood, and there is a large body of scientific work on discovering relationships and knowledge in numerical data. Our system logs, however, are strings of words, phrases, or full sentences that contain numbers only in places.

Looking for a way to analyze text mathematically, the Energy Logserver team turned to vectorization, a technique that converts any string of text into a vector of numbers. Armed with knowledge gained through cooperation with professors at the Warsaw University of Technology, we reached for the Word2Vec library.

Our goal is not just to convert text into numbers. In our current development work, we are looking for a convenient way to cluster input data without supervision, so that the operator can see the relevant data more easily.

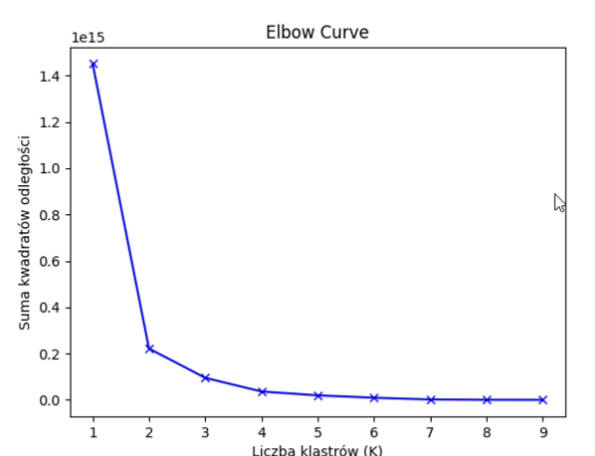

Before clustering, we analyze how many groups the algorithm proposes. This is done with the “elbow” method, which looks for the point where adding further groups stops improving the clustering noticeably.
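
As a rough illustration of the elbow method, the sketch below fits k-means for several values of k and records the within-cluster sum of squares (inertia). It assumes scikit-learn's `KMeans` and synthetic data from `make_blobs`; neither the clustering algorithm nor the data comes from the experiments described here.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic stand-in for vectorized log messages (assumption: 4 real groups).
X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=0)

candidate_ks = list(range(1, 9))
inertias = []
for k in candidate_ks:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    # inertia_ is the sum of squared distances of samples to their closest centre.
    inertias.append(model.inertia_)

for k, inertia in zip(candidate_ks, inertias):
    print(f"k={k}: inertia={inertia:.1f}")
```

When inertia is plotted against k, the suggested number of groups is the point where the curve stops dropping steeply and flattens out.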

Example of vector values obtained with Word2Vec:

-0.008289610035717487

0.007978345267474651

-0.006714770570397377

-0.008822474628686905

0.00699368491768837

-0.009639430791139603

After Word2Vec vectorization, the suggested number of groups was 2-3, which is too few: intuitively, we knew that the sample contained more types of events. We first looked for an error in the clustering step, and that analysis led us back to the vectorization itself.

In a way, it is obvious that if the data is poorly represented, it cannot be interpreted well. The analytical team therefore tested another vectorization tool, scikit-learn's TfidfVectorizer.

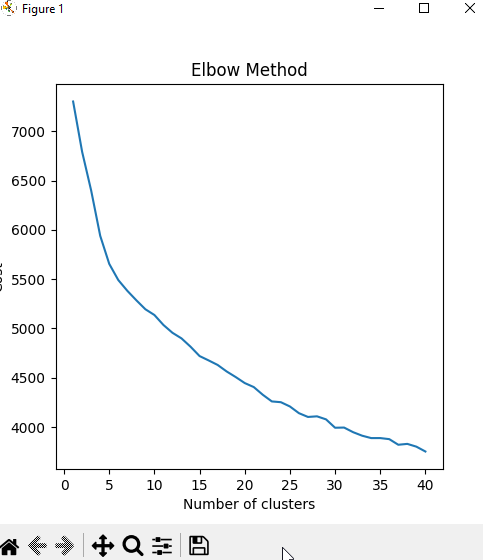

After reviewing the documentation and running several experiments, we noticed a significant improvement in the results.

Example of vector values obtained with TfidfVectorizer:

0.31702341388653776

0.07234997282939204

0.2194058215727943

0.21844503185283498

0.18796067640258898

The different shape of the curve shows that there are many more differences, and therefore more possible groups, in the input data.

Word2Vec creates vectors based on the context in which words appear in a body of text. The model analyzes the context of each word and assigns it a vector so that words used in similar contexts have similar vectors. This method is particularly useful for modeling word semantics, that is, for determining which words are close to each other in meaning.
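
To make "context" more concrete, the sketch below collects (word, context word) pairs from a sliding window, which is the kind of input the skip-gram variant of Word2Vec trains on. It shows only the pair extraction, not the training itself; the helper `context_pairs`, the window size, and the sample log line are hypothetical and chosen for illustration.

```python
def context_pairs(tokens, window=2):
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


# Hypothetical log message, split on whitespace.
tokens = "failed password for root".split()
pairs = context_pairs(tokens, window=1)
for target, context in pairs:
    print(target, "->", context)
```

Words that keep appearing with the same neighbours produce similar sets of pairs, which is why a model trained on them ends up giving those words similar vectors.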

TfidfVectorizer, on the other hand, produces vectors whose length equals the number of distinct words in the corpus. The vector for each document is built from how often each word occurs in that document, weighted by how common the word is across the whole corpus. In this way, vectors for different documents reflect different combinations of words. This method is especially useful for classifying and grouping documents.
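
A minimal sketch of this behaviour, assuming scikit-learn's `TfidfVectorizer` with its default settings and a handful of made-up log lines (the messages are not taken from the data analyzed here):

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical log messages; each one is treated as a separate document.
logs = [
    "sshd failed password for root from 10.0.0.5",
    "sshd failed password for admin from 10.0.0.7",
    "kernel out of memory killed process 4321",
    "cron job backup finished successfully",
]

vectorizer = TfidfVectorizer()
matrix = vectorizer.fit_transform(logs)  # sparse matrix, one row per log line

# Every row has one column per distinct token found in the corpus.
print("documents x vocabulary size:", matrix.shape)

# Rows are L2-normalized by default, so the dot product is the cosine similarity.
similarity = (matrix @ matrix.T).toarray()
print("line 0 vs line 1:", round(similarity[0][1], 3))
print("line 0 vs line 2:", round(similarity[0][2], 3))
```

The two `sshd` lines share most of their words and score as similar, while the `sshd` and `kernel` lines share none, which is the property a clustering step can build on.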

In summary, Word2Vec is mainly used to model word semantics, while TfidfVectorizer is used to classify and group documents.
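
To show how document grouping could look in practice, the sketch below clusters a few made-up log lines by their TF-IDF vectors. The use of scikit-learn's `KMeans`, the choice of three groups, and the sample messages are all assumptions for illustration, not the configuration used in the experiments above.

```python
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical log messages covering three kinds of events.
logs = [
    "sshd failed password for root from 10.0.0.5",
    "sshd failed password for admin from 10.0.0.7",
    "kernel out of memory killed process 4321",
    "kernel out of memory killed process 987",
    "cron backup job finished successfully",
    "cron cleanup job finished successfully",
]

vectors = TfidfVectorizer().fit_transform(logs)

# Assumption: the number of groups (3) is known, e.g. from an elbow analysis.
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(vectors)

# Print the messages ordered by the group they were assigned to.
for label, line in sorted(zip(labels.tolist(), logs)):
    print(label, line)
```

The group numbers themselves carry no meaning; only which messages end up sharing a number matters to the operator.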